Code Reuse Does Not Mean Copy and Paste

Pay attention - I'm only going to say this a few times.

DRY was the most important programming principle I've ever learned.

Was there a major turning point in your software development career? One occurred for me (I often half-joke) when I learned that "code reuse" did not mean copy and paste.

The technique of taking prior art, text, or symbols and rearranging them into something new and valuable may work for artists and spammers (or not, if you're Tristan Tzara at a 1920s Surrealist rally), but it's no way to write a program.

(The reuse part is fine, of course. It would be moronic (but sometimes not) to build systems from scratch when they could have been assembled from previously written, reusable components. Even in the exceptional case, it's not often you'll need to rewrite everything.

Can you imagine the absurdity of academic research publications if they were unable to build upon prior findings?

Whatever, enough of the justification. I hope we're in agreement that copy-and-paste code reuse is about the most evil thing you can do to maintenance programmers. If you want to punish them, you don't send them to hell. You duplicate as much buggy code as you can. Even better if it looks like it has the reproduction ability of the fruit fly.)

So, back to copy-and-paste reuse. It's not what people mean when they say you should reuse code, or when they tell you to write code that is reusable. It took me a while to become cognizant of that fact.

Because of my experience in the land of cut-and-paste, I've always wanted to write a program that would root out code that isn't "DRY," and then just point and laugh. Something to help it dry off. A towel for your code, if you will.

Because of that, I was pretty excited when

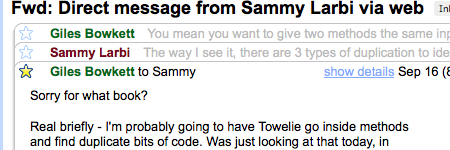

Giles Bowkett announced

Towlie, a Ruby library for keeping your code DRY.

I wanted to hack away at it that weekend. Unfortunately, Hurricane Ike had other plans for me. However, I did get to take a look at the source code before our power went out, and I had an email discussion with Giles after the power came back on.

I wanted to share with you some of the ideas we talked about in our discussion (his email used with permission, of course).

Three Types of Repetition To Detect

The way I see it, there are three types of duplication to identify (I'm not claiming there are only three, just that I only thought of three):

- Duplicate methods, which Towelie already identifies.

- Methods that partially duplicate each other. I'm not sure what Towelie identifies here. I know it works on the parse tree produced by ParseTree, but the specs show only exact duplicate methods. It could be extended to find exactly duplicated regions fairly easily.

  - Something harder to find (but worthwhile, in my opinion) would be duplicated code that is only part of a method and is not an exact copy.

- Duplication of result, where two methods may be doing exactly the same thing in different ways. We can easily check the return values of two methods given the same input over a few discrete cases to assign probabilities of duplication. We can also compare the state of potentially affected objects. Doing so would amount to comparing the member variables of objects that were passed in to the method, as well as those of the object the method belongs to (checking whether changes were made to each, and if so, whether they are the same changes). Limited to that type of analysis, the check would be doable and not very time-consuming.
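The return-value and state comparison in the last bullet can be sketched in plain Ruby. This is a minimal sketch, not Towelie's API: `outcome` and `result_duplication_score` are hypothetical names, the sample inputs are hand-picked, and arguments are deep-copied with `Marshal` so each method sees fresh objects (which assumes the arguments can be marshaled). It compares return values, raised exception classes, and the state of the arguments after each call; it does not inspect the receiver's instance variables.

```ruby
# Hypothetical helpers (not part of Towelie): estimate how often two callables
# produce the same results on the same sample inputs.

# Run one callable on a deep copy of the arguments and record what happened:
# the return value (or exception class) plus the arguments' state afterwards.
def outcome(callable, args)
  copies = Marshal.load(Marshal.dump(args)) # fresh objects for every call
  [:returned, callable.call(*copies), copies]
rescue StandardError => e
  [:raised, e.class, copies]
end

# Fraction of samples on which both callables behave identically.
def result_duplication_score(first, second, samples)
  return 0.0 if samples.empty?
  matches = samples.count { |args| outcome(first, args) == outcome(second, args) }
  matches.to_f / samples.size
end

sum_with_inject = ->(arr) { arr.inject(0) { |total, x| total + x } }
sum_with_each   = ->(arr) { total = 0; arr.each { |x| total += x }; total }
sum_with_sort   = ->(arr) { arr.sort!; arr.inject(0) { |total, x| total + x } } # mutates its argument

samples = [[[3, 1, 2]], [[]], [[-5, 5]]] # each sample is one argument list

p result_duplication_score(sum_with_inject, sum_with_each, samples) # => 1.0
p result_duplication_score(sum_with_inject, sum_with_sort, samples) # => 0.6666666666666666
```

The second pair returns the same sums everywhere, but `sort!` changes the caller's array on one sample, so the state comparison lowers its score.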

Duplication of Results

Giles correctly pointed out that determining "duplication of results" is fairly easy, and people are already doing that in the test generation world:

> You mean you want to give two methods the same input and then determine if they return the same output? That part is easy, you can do that with a code block which auto-generates tests or specs.
>
> ...
>
> Regarding the auto-generated testing, you could just throw the kitchen sink at legacy methods and see which ones barf. E.g.
>
> `lambda{maybe_this_takes_a_string("why not")}.should_not raise_error`
>
> And I think that code would be both useful and funny, like flog or heckle. Weird how testing tools can be witty. But I don't think that would necessarily get you output you could actually do very much with.

I'm not convinced of the kitchen-sink approach for un-DRY detection either. If you send everything you can think of or find, the running time is no longer polynomial: it grows combinatorially with the number of methods, the number of arguments, and the number of types in the system, since each additional argument multiplies the number of calls to try by the number of candidate types.

Given enough time, it would work. But since you're calling each method with every possible combination of arguments from the space of all objects, my best premature-optimization guess is that it would become intractable for the usage I'm interested in.
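To see how quickly the kitchen-sink approach grows, here is a minimal sketch that throws every combination from a small pool of sample values at a method's required positional arguments. The `POOL` contents and the `argument_lists` / `kitchen_sink` names are hypothetical; a real tool would draw candidates from the types actually present in the system. With seven candidates, a one-argument method takes 7 calls, but a three-argument method already takes 343.

```ruby
# Hypothetical pool of sample values, one per "type" we might throw at a method.
POOL = [nil, 0, 1.5, "why not", :sym, [], {}].freeze

# Every argument list of the given arity: POOL.size ** arity combinations.
def argument_lists(arity)
  return [[]] if arity.zero?
  POOL.product(*Array.new(arity - 1) { POOL })
end

# Call a lambda or Method with every combination for its required positional
# arguments, recording which calls return and which ones barf.
def kitchen_sink(callable)
  required = callable.arity
  required = -required - 1 if required.negative? # arity is -(n+1) when optional args exist
  argument_lists(required).map do |args|
    begin
      [args, :ok, callable.call(*args)]
    rescue StandardError => e
      [args, :barfed, e.class]
    end
  end
end

(1..3).each { |k| puts "#{k} argument(s): #{argument_lists(k).size} calls" }
# 1 argument(s): 7 calls
# 2 argument(s): 49 calls
# 3 argument(s): 343 calls

results = kitchen_sink(->(s) { s.upcase })
puts results.count { |_, status, _| status == :ok } # 2: only the String and the Symbol succeed
```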

I don't necessarily need to generate tests or find duplicate code within seconds or milliseconds, but a few minutes at most would be a requirement, potentially as part of a build process. Instantaneous would be awesome for running as part of my test suite (the one I run every few minutes), but I expect duplicated code to creep in slowly, so running it less frequently might not be a problem. I'd rather run it every time if possible, though. After all, I heard something good about TATFT.

To get it where I think it would be most useful, you'd need to do some static analysis to help narrow down the types of arguments that can be sent to a particular method. Doing so may provide some clues. However, what might be more interesting is building a dynamic observer that watches what happens when objects are created and their methods are run, which would tell us what types each method can accept. I don't have any idea how I'd go about doing either of those things, but one idea Giles floated was to hack Rubinius to do the dynamic observation. It would be worth looking at if you agree that finding "duplication of results" and limiting the running time are important.
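Giles's suggestion was to hack Rubinius; as a much lighter stand-in, plain Ruby can already observe argument types at runtime by prepending a wrapper module with `Module#prepend`. This is a minimal sketch under that assumption, not a Rubinius hack: `ArgumentTypeObserver` and `watch` are hypothetical names, `Greeter` is a toy class, and only positional arguments are handled.

```ruby
# Hypothetical runtime observer: wraps chosen methods via Module#prepend and
# records the classes of the arguments each one actually receives.
module ArgumentTypeObserver
  SEEN = Hash.new { |hash, key| hash[key] = [] } # "Class#method" => [[classes], ...]

  def self.watch(klass, *method_names)
    wrapper = Module.new do
      method_names.each do |name|
        # Positional arguments only; keyword arguments are not handled here.
        define_method(name) do |*args, &block|
          signature = args.map(&:class)
          seen = ArgumentTypeObserver::SEEN["#{klass}##{name}"]
          seen << signature unless seen.include?(signature)
          super(*args, &block) # fall through to the original method
        end
      end
    end
    klass.prepend(wrapper)
  end
end

class Greeter
  def greet(name, times)
    "hi #{name} " * times
  end
end

ArgumentTypeObserver.watch(Greeter, :greet)
greeter = Greeter.new
greeter.greet("Ann", 2)
greeter.greet(:bob, 1)
greeter.greet("Cy", 3)

p ArgumentTypeObserver::SEEN["Greeter#greet"] # => [[String, Integer], [Symbol, Integer]]
```

Running the test suite with such wrappers in place would collect the argument signatures that real code uses, which could then seed the inputs for a "duplication of results" check instead of the whole kitchen sink.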

Methods with Partial Duplication

In its first release, Towelie only detected entirely duplicate methods. I figured it would be easy enough to extend its usage of ParseTree to dig a bit deeper and find parts of methods that were duplicated.
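Detecting entirely duplicate methods is the easy starting point. ParseTree does not run on modern Ruby, so this minimal sketch swaps in the standard library's `Ripper` instead: it parses source, strips the line/column positions Ripper attaches to tokens, and groups `def` nodes whose parameters and bodies are identical. `find_duplicate_methods` and its helpers are hypothetical names, not Towelie's API, and the sketch assumes that two-integer arrays in Ripper's output only ever represent positions.

```ruby
require "ripper"

# Ripper tags tokens with [line, column]; drop those so identical code at
# different positions compares equal. (Assumes integer pairs are positions.)
def strip_positions(node)
  return node unless node.is_a?(Array)
  return nil if node.size == 2 && node.all? { |x| x.is_a?(Integer) }
  node.map { |child| strip_positions(child) }
end

# Yield every instance-method definition node ([:def, name, params, body]).
def each_def(node, &block)
  return unless node.is_a?(Array)
  yield node if node.first == :def
  node.each { |child| each_def(child, &block) }
end

# Group method names whose parameters and bodies are exactly the same.
def find_duplicate_methods(source)
  groups = Hash.new { |hash, key| hash[key] = [] }
  each_def(Ripper.sexp(source)) do |node|
    name = node[1][1]
    groups[strip_positions(node[2..-1])] << name
  end
  groups.values.select { |names| names.size > 1 }
end

source = <<~RUBY
  def price_with_tax(price)
    price * 1.08
  end

  def total_with_tax(price)
    price * 1.08
  end

  def discounted(price)
    price * 0.9
  end
RUBY

p find_duplicate_methods(source) # => [["price_with_tax", "total_with_tax"]]
```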

When I asked Giles about it, he agreed and went into a little more depth about the challenges (I added emphasis and formatting):

> I'm probably going to have Towelie go inside methods and find duplicate bits of code. Was just looking at that today, in fact. But: can't guarantee it'll work, and the drawback is that you've got these trees, if you go recursive enough you'll be comparing them on the element-by-element level, where you'll find craploads of duplication which is utterly meaningless. So extracting useful information is the tricky part there.
>
> Duplicated methods are just a nice easy place to start - obviously if you have exact duplicates in your code base, the next step there from a DRY perspective is easy. In addition to extracting duplicate blocks, I also want Towelie to be able to recognize that the methods in its current test data only differ by one literal value. That's actually relatively easy - you can do recursive tests for equality, collect the differences, and then determine whether the differences represent literals. No problem.
>
> "Easy" in the developer sense, of course, which translates in real life to "theoretically possible and I have a vague plan."
>
> Finding near-duplicate code fragments within a method - if I get the other stuff working it may become possible to find this, currently it'd be a shitload of work.
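Giles's "relatively easy" recipe (recursive equality tests, collect the differences, check whether they are literals) might look roughly like the following. It is a sketch under stated assumptions: method bodies are hand-written, ParseTree-style s-expressions (nested arrays) whose exact shape may differ from real ParseTree output, `:lit` and `:str` nodes are treated as literals, and `sexp_differences` / `differ_only_by_literals?` are hypothetical names, not Towelie code.

```ruby
# Node types treated as literals (an assumption based on ParseTree-style sexps).
LITERAL_NODES = [:lit, :str].freeze

def literal?(node)
  node.is_a?(Array) && LITERAL_NODES.include?(node.first)
end

# Recursively compare two s-expressions, collecting [path, left, right] for
# every position where they differ. Literal nodes are reported whole.
def sexp_differences(a, b, path = [])
  return [] if a == b
  if literal?(a) && literal?(b)
    [[path, a, b]]
  elsif a.is_a?(Array) && b.is_a?(Array) && a.size == b.size && a.first == b.first
    a.each_index.flat_map { |i| sexp_differences(a[i], b[i], path + [i]) }
  else
    [[path, a, b]] # structural difference: different node types or shapes
  end
end

# True when the trees differ, but only in literal values.
def differ_only_by_literals?(a, b)
  diffs = sexp_differences(a, b)
  !diffs.empty? && diffs.all? { |_, left, right| literal?(left) && literal?(right) }
end

# Hand-written bodies: price * 0.08875, price * 0.0825, and cost * 0.0825.
ny_tax   = [:call, [:lvar, :price], :*, [:arglist, [:lit, 0.08875]]]
tx_tax   = [:call, [:lvar, :price], :*, [:arglist, [:lit, 0.0825]]]
cost_tax = [:call, [:lvar, :cost], :*, [:arglist, [:lit, 0.0825]]]

p sexp_differences(ny_tax, tx_tax)           # => [[[3, 1], [:lit, 0.08875], [:lit, 0.0825]]]
p differ_only_by_literals?(ny_tax, tx_tax)   # => true
p differ_only_by_literals?(tx_tax, cost_tax) # => false (a variable differs)
```

Once two methods are known to differ only in literals, the collected paths say exactly which values to lift into a parameter of a shared method.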

The problem of noise Giles describes brings up the question: what do we consider duplication?

If I have a method containing "return x+y" versus one whose body is just "x + y", should I consider that repetition? In the case of one-liners, I'd say yes. But would I say in-line addition is repetition in a general sense? Probably not.

I'd consider counting the number of consecutive duplicated lines, or the distance between duplicated lines, in determining whether something is duplicated. You could normalize the count by dividing by the length of the smaller method, or use something more complex. Heuristics such as these can help in determining what counts as duplicate, and in finding interleaved or "almost" duplicate code.
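The consecutive-lines heuristic, normalized by the smaller method, might be sketched as follows. The name `consecutive_duplication_score`, the whitespace normalization, and treating each method as an array of source lines are assumptions for illustration: the score is the longest run of identical consecutive lines divided by the line count of the smaller method, so 1.0 means the smaller method appears verbatim inside the larger one.

```ruby
# Collapse whitespace so indentation changes don't hide duplication.
def normalize_lines(lines)
  lines.map { |line| line.strip.gsub(/\s+/, " ") }.reject(&:empty?)
end

# Longest run of identical consecutive lines shared by both methods, divided by
# the length of the smaller method (longest common substring over lines).
def consecutive_duplication_score(lines_a, lines_b)
  a = normalize_lines(lines_a)
  b = normalize_lines(lines_b)
  return 0.0 if a.empty? || b.empty?

  longest = 0
  run = Array.new(b.size + 1, 0) # run[j + 1]: length of the common run ending at a[i], b[j]
  a.each do |line_a|
    diagonal = 0 # run value from the previous row, one column to the left
    b.each_with_index do |line_b, j|
      above = run[j + 1]
      run[j + 1] = line_a == line_b ? diagonal + 1 : 0
      longest = run[j + 1] if run[j + 1] > longest
      diagonal = above
    end
  end
  longest.to_f / [a.size, b.size].min
end

original = ["total = 0", "items.each { |i| total += i.price }", "total * 1.08"]
pasted   = ["total = 0", "items.each { |i|  total += i.price }", "total * 1.0825"]

p consecutive_duplication_score(original, pasted) # => 0.6666666666666666
```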

I wouldn't expect our DRYer to identify code that uses `(0..(arr.length-1)).each { ... }` versus `arr.each_index { ... }`. Rather, I was thinking of code that was duplicated by copy and paste, but where the copy-paster also introduced a new variable in that frame.
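For the copy-paste-plus-one-new-variable case, a longest common subsequence of lines (rather than a strictly consecutive run) tolerates the extra lines the pasted copy picked up. This is a standard, swapped-in technique rather than anything Towelie does; `interleaved_duplication_score` is a hypothetical name, and comparing stripped source lines is a simplifying assumption.

```ruby
# Length of the longest common subsequence of two arrays of lines (classic DP).
def lcs_length(a, b)
  table = Array.new(a.size + 1) { Array.new(b.size + 1, 0) }
  a.each_with_index do |x, i|
    b.each_with_index do |y, j|
      table[i + 1][j + 1] =
        x == y ? table[i][j] + 1 : [table[i][j + 1], table[i + 1][j]].max
    end
  end
  table[a.size][b.size]
end

# Shared lines, in order but not necessarily adjacent, relative to the smaller method.
def interleaved_duplication_score(lines_a, lines_b)
  a = lines_a.map(&:strip).reject(&:empty?)
  b = lines_b.map(&:strip).reject(&:empty?)
  return 0.0 if a.empty? || b.empty?
  lcs_length(a, b).to_f / [a.size, b.size].min
end

original = ["total = 0", "items.each do |i|", "  total += i.price", "end", "total"]
pasted   = ["total = 0", "count = 0", "items.each do |i|", "  total += i.price",
            "  count += 1", "end", "total"]

p interleaved_duplication_score(original, pasted) # => 1.0
```

On the same pair, the longest strictly consecutive run covers only 2 of the 5 original lines, because the new `count` variable splits the copy; the subsequence still finds all of them.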

Putting the question to you all

How important is the DRY principle to you? Does repetitive code warrant having a tool to report its existence, or are you and your team doing just fine without it?

Most importantly, how would you go about detecting duplicated code, especially if you were to programmatically try to do it?

(Note on the title: The opportunity for three "tions" in a row could not be passed up for the DRYer title of "Ideas for (Repeti + Detec + Automa) * tion and The Importance of DRY" (assuming the Distributive Property of Strings holds))
